Installing the k8s control-plane (master) node:

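1. Configure the image registry

2. Configure firewall rules

3. Install packages (master)

4. Import images into the registry

5. Tab completion setup

6. Install proxy (IPVS) packages

7. Configure the master host environment

8. Deploy with kubeadm

9. Verify the installation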

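1. Configure the image registry

This example uses the docker-distribution package as the image registry because it is very simple to configure. Production environments usually use Harbor instead. docker-distribution provides only a very basic registry: it communicates over plain HTTP and has no access authentication, so it is generally used only on internal networks. Its advantage is that it is easy to install and use.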

yum install -y docker-distribution

systemctl enable --now docker-distribution

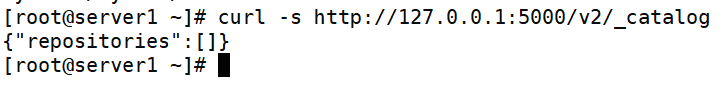

curl -s http://registry:5000/v2/_catalog

Note: because the hosts file had not been modified, the screenshot above accesses the registry by IP address.
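The commands in this guide refer to the registry by the name `registry`, which only works once that name resolves on each host. A minimal sketch for adding the hosts entry idempotently, assuming the registry host is 192.168.1.30 (the address that also appears under `insecure-registries` in daemon.json); `add_registry_host` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: map the name "registry" to the registry host's IP in a hosts file.
# ASSUMPTION: the registry runs on 192.168.1.30; adjust to your environment.
# add_registry_host is a hypothetical helper, not part of any package.
add_registry_host() {
    local ip="${1:-192.168.1.30}"
    local hosts_file="${2:-/etc/hosts}"
    # Append only if no uncommented line already maps an IP to "registry".
    if ! grep -Eq '^[^#]*[[:space:]]registry([[:space:]]|$)' "${hosts_file}"; then
        printf '%s\tregistry\n' "${ip}" >> "${hosts_file}"
    fi
}
```

Usage: `add_registry_host 192.168.1.30 /etc/hosts`, after which `curl -s http://registry:5000/v2/_catalog` should work by name.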

2. Configure firewall rules

3. Install packages (master)

yum makecache

yum install -y kubeadm kubelet kubectl docker-ce
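The `yum install` line above installs whichever kubeadm/kubelet/kubectl version the repository currently offers, which may be newer than the v1.22.5 images used later in this guide. A minimal sketch that pins the versions instead, assuming the configured repository publishes packages named `kubeadm-<version>`, `kubelet-<version>` and `kubectl-<version>`; `pinned_k8s_packages` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Sketch: print a yum package list with kubeadm/kubelet/kubectl pinned to
# one version so they match the image tags (v1.22.5 in this guide).
# ASSUMPTION: the repo uses the "<name>-<version>" package naming scheme.
pinned_k8s_packages() {
    local ver="${1:-1.22.5}"
    local pkg
    for pkg in kubeadm kubelet kubectl; do
        printf '%s-%s ' "${pkg}" "${ver}"
    done
    printf 'docker-ce\n'   # docker-ce itself is left unpinned, as in the guide
}
```

Usage: `yum install -y $(pinned_k8s_packages 1.22.5)`.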

mkdir -p /etc/docker

vim /etc/docker/daemon.json

{
    "exec-opts": ["native.cgroupdriver=systemd"],
    "registry-mirrors": ["http://registry:5000", "https://hub-mirror.c.163.com"],
    "insecure-registries": ["192.168.1.30:5000", "registry:5000"]
}

4. Import images into the registry

# List the container images required to install Kubernetes

kubeadm config images list

k8s.gcr.io/kube-apiserver:v1.22.5
k8s.gcr.io/kube-controller-manager:v1.22.5
k8s.gcr.io/kube-scheduler:v1.22.5
k8s.gcr.io/kube-proxy:v1.22.5
k8s.gcr.io/pause:3.5
k8s.gcr.io/etcd:3.5.0-0
k8s.gcr.io/coredns/coredns:v1.8.4

Download the images listed above, pack them into an archive, and upload it to the master host.
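The download-and-pack step can be scripted on a machine that does have internet access. A minimal sketch, assuming docker and xz are installed there; `save_k8s_images` is a hypothetical helper that reads one image reference per line from stdin, pulls each one, and writes them all into a single xz-compressed archive:

```shell
#!/usr/bin/env bash
# Sketch: run on a machine WITH internet access. Pull every image read from
# stdin and bundle them into one xz-compressed tar archive.
# save_k8s_images is a hypothetical helper; docker and xz must be installed.
save_k8s_images() {
    local out="${1:-v1.22.5.tar.xz}"
    local images=""
    local img
    while read -r img; do
        [ -z "${img}" ] && continue           # skip blank lines
        docker pull "${img}" </dev/null       # keep docker away from the list on stdin
        images="${images} ${img}"
    done
    # Unquoted on purpose: split into one argument per image reference.
    # docker save writes one tar stream containing all listed images.
    docker save ${images} | xz > "${out}"
}
```

Usage: `kubeadm config images list --kubernetes-version v1.22.5 | save_k8s_images v1.22.5.tar.xz`, then copy the file to the master host as `init/v1.22.5.tar.xz`.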

docker load -i init/v1.22.5.tar.xz
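The retag loop that follows names each pushed image `registry:5000/k8s/${i##*/}:${t}`, keeping only the last path component of the repository; that is why `k8s.gcr.io/coredns/coredns` appears as `k8s/coredns` in the catalog. A small sketch of the same mapping as a standalone function (hypothetical name `to_registry_ref`), useful for checking what name an image will get before pushing:

```shell
#!/usr/bin/env bash
# Sketch of the renaming rule used by the retag loop: drop the source
# registry and any path prefix, keep NAME:TAG, and re-home the image under
# registry:5000/k8s. to_registry_ref is a hypothetical helper.
to_registry_ref() {
    local last="${1##*/}"          # k8s.gcr.io/coredns/coredns:v1.8.4 -> coredns:v1.8.4
    local name="${last%%:*}"       # coredns
    local tag="latest"             # docker's default when no tag is given
    case "${last}" in
        *:*) tag="${last#*:}" ;;   # v1.8.4
    esac
    printf 'registry:5000/k8s/%s:%s\n' "${name}" "${tag}"
}
```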

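# Retag every loaded image under registry:5000/k8s/, push it, then remove both local tags
docker images|while read -r i t _;do
    [[ "${t}" == "TAG" ]] && continue    # skip the header line of "docker images"
    docker tag "${i}:${t}" "registry:5000/k8s/${i##*/}:${t}"
    docker push "registry:5000/k8s/${i##*/}:${t}"
    docker rmi "${i}:${t}" "registry:5000/k8s/${i##*/}:${t}"
done

# List the repositories now stored in the registry
curl -s http://registry:5000/v2/_catalog|python -m json.tool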

{
    "repositories": [
        "k8s/coredns",
        "k8s/etcd",
        "k8s/kube-apiserver",
        "k8s/kube-controller-manager",
        "k8s/kube-proxy",
        "k8s/kube-scheduler",
        "k8s/pause"
    ]
}

5. Tab completion setup

source <(kubeadm completion bash|tee /etc/bash_completion.d/kubeadm)
source <(kubectl completion bash|tee /etc/bash_completion.d/kubectl)

6. Install proxy (IPVS) packages

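# kube-proxy can use LVS (IPVS) for load balancing, but the ipvsadm and ipset packages must be installed beforehand
yum install -y ipvsadm ipset

7. Configure the master host environment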

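# Load the kernel modules now and register them to be loaded again at boot
for i in overlay br_netfilter;do
    modprobe ${i}
    echo "${i}" >>/etc/modules-load.d/containerd.conf
done

# Enable IP forwarding and pass bridged traffic through iptables/ip6tables
cat >/etc/sysctl.d/99-kubernetes-cri.conf<<EOF
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF

sysctl --system

8. Deploy with kubeadm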

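# Dry run first: show what would be done without applying any changes, then remove the temporary files it leaves behind
kubeadm init --config=init/kubeadm-init.yaml --dry-run
rm -rf /etc/kubernetes/tmp

# Real deployment; keep a copy of the output, which includes the "kubeadm join" command for worker nodes
kubeadm init --config=init/kubeadm-init.yaml |tee init/init.log

# Give the current user kubectl access to the cluster
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

How to create the configuration (answer) file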

# Create a template file
kubeadm config print init-defaults > init.yaml

# Show the default kube-proxy configuration
kubeadm config print init-defaults --component-configs KubeProxyConfiguration

# Show the default KubeletConfiguration
kubeadm config print init-defaults --component-configs KubeletConfiguration

# Show the current version information (in YAML format)
kubeadm version -o yaml

# Edit the template to produce the complete answer file

Explanation of selected answer-file fields

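(The leading numbers are the line numbers of each field in the edited init.yaml.)

12: advertiseAddress: 192.168.1.50   # IP address of the control-plane (master) node
15: criSocket: /var/run/dockershim.sock   # socket of the container runtime
17: name: master   # name of the control-plane node
30: imageRepository: registry:5000/k8s   # private registry address
32: kubernetesVersion: 1.22.5   # Kubernetes version, also used as the image tag
35: podSubnet: 10.244.0.0/16   # pod address range (newly added line)
36: serviceSubnet: 10.245.0.0/16   # service address range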
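The field changes listed above can also be applied with `sed` instead of editing by hand. A minimal sketch, assuming GNU sed (for `-i` and `\n` in a replacement) and the v1.22 `init-defaults` layout; the helper name `customize_init_yaml` is hypothetical, the values are the ones used in this guide, and `criSocket` is left as generated:

```shell
#!/usr/bin/env bash
# Sketch: apply this guide's values to a template created with
# "kubeadm config print init-defaults > init.yaml".
# ASSUMPTIONS: GNU sed; customize_init_yaml is a hypothetical helper.
customize_init_yaml() {
    local f="$1"
    local ip="${2:-192.168.1.50}"
    local node="${3:-master}"
    # Each expression keeps the line's original indentation (captured as \1).
    sed -i \
        -e "s|^\([[:space:]]*\)advertiseAddress:.*|\1advertiseAddress: ${ip}|" \
        -e "s|^\([[:space:]]*\)name:.*|\1name: ${node}|" \
        -e "s|^\([[:space:]]*\)imageRepository:.*|\1imageRepository: registry:5000/k8s|" \
        -e "s|^\([[:space:]]*\)kubernetesVersion:.*|\1kubernetesVersion: 1.22.5|" \
        -e "s|^\([[:space:]]*\)serviceSubnet:.*|\1serviceSubnet: 10.245.0.0/16|" \
        "${f}"
    # podSubnet is not in the template: insert it after dnsDomain (same indent),
    # but only once, so running the function twice is harmless.
    if ! grep -q 'podSubnet:' "${f}"; then
        sed -i "s|^\([[:space:]]*\)dnsDomain:.*|&\n\1podSubnet: 10.244.0.0/16|" "${f}"
    fi
}
```

Usage: `customize_init_yaml init.yaml 192.168.1.50 master`, then review the file before appending the component configurations.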

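# Append the following at the end of the file to enable IPVS mode
---
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
mode: ipvs
ipvs:
  strictARP: true

# Set the cgroup driver used by the kubelet to systemd (matching "native.cgroupdriver=systemd" in daemon.json)
---
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
cgroupDriver: systemd

9. Verify the installation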

kubectl cluster-info

Kubernetes control plane is running at https://192.168.1.50:6443

CoreDNS is running at https://192.168.1.50:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

kubectl get nodes

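NAME     STATUS     ROLES                  AGE   VERSION
master   NotReady   control-plane,master   90s   v1.22.5

The node reports NotReady at this point because no pod network (CNI) add-on has been installed yet.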
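When scripting the post-install checks, it can help to list only the nodes that are not yet usable. A tiny sketch, assuming `kubectl get nodes` output including its header row is piped in; `not_ready_nodes` is a hypothetical helper that prints the name of every node whose STATUS column is anything other than exactly `Ready`:

```shell
#!/usr/bin/env bash
# Sketch: read "kubectl get nodes" output (header row included) on stdin and
# print the NAME of each node whose STATUS is not exactly "Ready".
# not_ready_nodes is a hypothetical helper name.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Usage: `kubectl get nodes | not_ready_nodes`; an empty result means every node reports Ready.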
